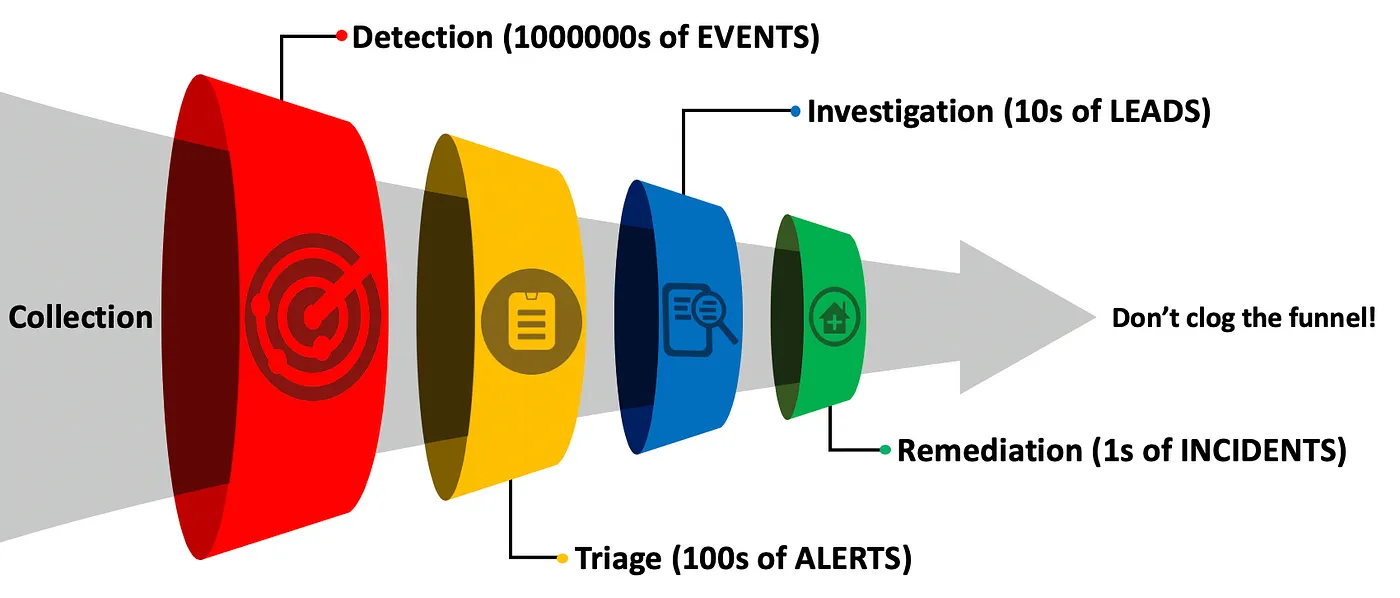

Funnel of Fidelity

The Funnel of Fidelity is a way to model the detection and response process of an organization.

It is segmented into the following five steps, each filtering out more noise than the one before.

- Collection

- Detection

- Triage

- Investigation

- Remediation

Each step also depends on the previous step being done properly; otherwise the subsequent steps are hindered or disrupted. For example:

- An organization does not have a SIEM with centralized telemetry collection. As a result, there aren’t any events to build detections around, which results in a clogged funnel.

- An organization has a functional level of centralized telemetry collection, but has not created any detections. As a result, no alerts are produced, which starves the triage and investigation processes and results in a clogged funnel.

- An organization has great centralized telemetry collection, a robust detection engineering process, and really strong triage procedures, but no incident response plan. As a result, it can detect an attack but cannot reliably remediate it, which results in a clogged funnel.
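The dependency between steps can be sketched in a few lines of Python. This is only an illustration: the step names come from the list above, while the capability flags and the helper names `first_clog` and `working_stages` are hypothetical.

```python
from typing import Dict, List, Optional

# Step names as listed above, in funnel order.
STAGES: List[str] = ["Collection", "Detection", "Triage", "Investigation", "Remediation"]


def first_clog(capabilities: Dict[str, bool]) -> Optional[str]:
    """Return the first step the organization cannot perform, or None."""
    for stage in STAGES:
        if not capabilities.get(stage, False):
            return stage
    return None


def working_stages(capabilities: Dict[str, bool]) -> List[str]:
    """Steps that can deliver value: everything before the first clog."""
    clog = first_clog(capabilities)
    if clog is None:
        return list(STAGES)
    return STAGES[: STAGES.index(clog)]


# Third example above: everything in place except an incident response plan.
no_ir_plan = {
    "Collection": True,
    "Detection": True,
    "Triage": True,
    "Investigation": True,
    "Remediation": False,
}

print(first_clog(no_ir_plan))      # Remediation
print(working_stages(no_ir_plan))  # the four steps before Remediation
```

A missing early step (for instance, no collection) leaves `working_stages` empty, which mirrors the first example: nothing downstream has any input to work with.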

Collection

- Input: Data Sensors

- Output: Events

Mature organizations should strive to centralize as much telemetry as possible to enable enterprise detection activities. The collection phase gathers events from data sensors (Windows event logs, commercial EDR solutions, proxy logs, netflow, etc.) and makes them available for the detection stage.

Detection

- Input: Events

- Output: Alerts

With events being collected in a centralized fashion, detection engineers define detection logic, using the Detection Development Lifecycle, to identify events that are security relevant. The goal of the detection stage is to reduce the millions or billions of events from the collection stage to hundreds or even thousands of alerts, which will be analysed during the triage stage.

These detections are created in an interactive process often referred to as Threat Hunting or Detection Engineering, but should be implemented in production through an automated process where detection logic is applied to events to generate alerts, achieving Detection as Code.
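As a rough sketch of what Detection as Code can look like, the snippet below expresses a detection as a named predicate and applies it to a stream of events to produce alerts. The event fields (`host`, `process`, `command_line`), the `Detection` class, and the example rule are all assumptions made for illustration; real detections are usually written in the query or rule language of the SIEM or EDR in use.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List

Event = Dict[str, str]


@dataclass(frozen=True)
class Detection:
    """A named piece of detection logic that can be version-controlled and tested."""
    name: str
    matches: Callable[[Event], bool]


def run_detections(events: Iterable[Event], detections: List[Detection]) -> List[dict]:
    """Apply every detection to every event and emit one alert per match."""
    alerts = []
    for event in events:
        for detection in detections:
            if detection.matches(event):
                alerts.append({"detection": detection.name, "event": event})
    return alerts


# Hypothetical rule: PowerShell launched with an encoded command.
encoded_powershell = Detection(
    name="encoded_powershell",
    matches=lambda e: e.get("process", "").lower() == "powershell.exe"
    and "-enc" in e.get("command_line", "").lower(),
)

events = [
    {"host": "ws01", "process": "powershell.exe", "command_line": "powershell.exe -enc SQBFAFgA"},
    {"host": "ws02", "process": "notepad.exe", "command_line": "notepad.exe notes.txt"},
    {"host": "ws03", "process": "powershell.exe", "command_line": "powershell.exe Get-Date"},
]

alerts = run_detections(events, [encoded_powershell])
print(len(alerts))  # 1
```

Keeping the rule as plain data plus a predicate means it can be reviewed, unit-tested against sample events, and deployed automatically, which is the point of treating detections as code.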

Triage

- Input: Alerts

- Output: Leads

Alerts are the result of detection logic, but it is reasonable to expect some amount of false positives. The triage stage is where Incident Response categorizes each alert as known bad (malicious), known good (benign), or unknown activity. Malicious activity is immediately identified as an incident and moved to the remediation stage, while unknown activity is identified as a lead and sent to the investigation stage because it requires additional scrutiny.
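The routing described above can be sketched as follows. The `Verdict` enum and the `route` function are hypothetical names, and the verdicts are supplied by hand here; in practice they come from an analyst or from triage tooling.

```python
from enum import Enum
from typing import Iterable, List, Tuple


class Verdict(Enum):
    MALICIOUS = "known bad"
    BENIGN = "known good"
    UNKNOWN = "unknown"


def route(triaged: Iterable[Tuple[str, Verdict]]) -> Tuple[List[str], List[str]]:
    """Split triaged alerts into (incidents, leads); benign alerts leave the funnel."""
    incidents: List[str] = []
    leads: List[str] = []
    for alert, verdict in triaged:
        if verdict is Verdict.MALICIOUS:
            incidents.append(alert)   # goes straight to remediation
        elif verdict is Verdict.UNKNOWN:
            leads.append(alert)       # needs investigation
        # Verdict.BENIGN: false positive, nothing further to do
    return incidents, leads


triaged_alerts = [
    ("alert-1", Verdict.BENIGN),
    ("alert-2", Verdict.MALICIOUS),
    ("alert-3", Verdict.UNKNOWN),
    ("alert-4", Verdict.BENIGN),
]

incidents, leads = route(triaged_alerts)
print(incidents)  # ['alert-2']
print(leads)      # ['alert-3']
```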

Investigation

- Input: Leads

- Output: Incidents

The triage stage works to remove false positives from the pipeline and results in a manageable number of leads (likely in the single or double digits). A lead is an activity that cannot be identified as malicious or benign and thus requires additional investigation. The investigation stage is used to collect additional context that may not be available during the detection or triage phases. This may involve more manual, less scalable analysis, such as file system analysis, memory forensics, or binary analysis, to help identify the true source of the activity. This additional scrutiny is possible because of the reduction in noise that occurred during the previous stages.

Remediation

- Input: Incidents

- Output: N/A

Once an incident is declared, remediation activities must occur. This is the phase where incident responders work to identify the scope of the incident and remove the infection from the network. Many organizations work with third parties to accomplish remediation activities and ensure that they are completed in a timely manner. It is important to practice remediation in non-emergency situations to ensure the plan is sufficient and any issues are worked out.

Relevant Note(s):